- March 19, 2024

- Posted by: Anisha P

- Categories: GCP, Snowflake

Introduction:

Snowpipe enables loading data from files as soon as they’re available in a stage. This implies that instead of manually running COPY statements on a schedule to load larger batches, you may load data from files in micro-batches and make it available to users in a matter of minutes.

This topic explains how to use Google Cloud Pub/Sub messages for Google Cloud Storage (GCS) events to automatically trigger Snowpipe data loading.

Prerequisites:

- Snowflake account

- GCP service account

Steps to be followed:

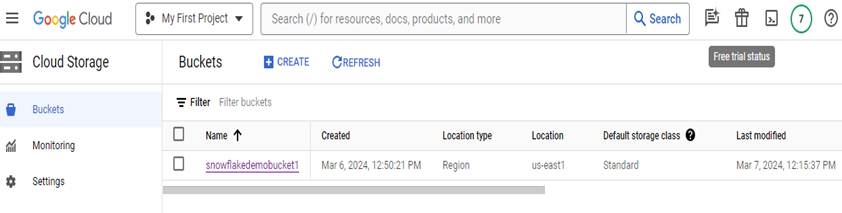

- Create GCP account and a storage bucket in the GCP account.

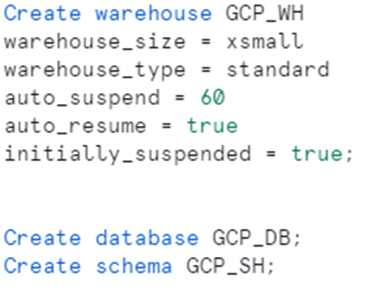

2. Open snowflake account and setup warehouse, database in which the data must be loaded.

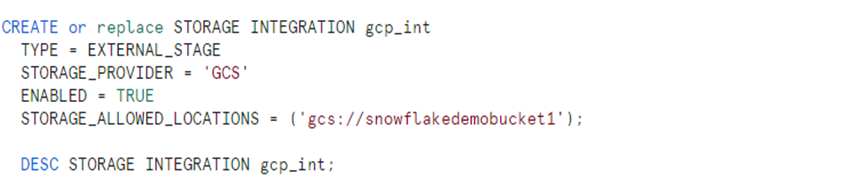

3. In Snowflake, create a storage integration for GCP and describe that storage integration to get a storage GCP service account.

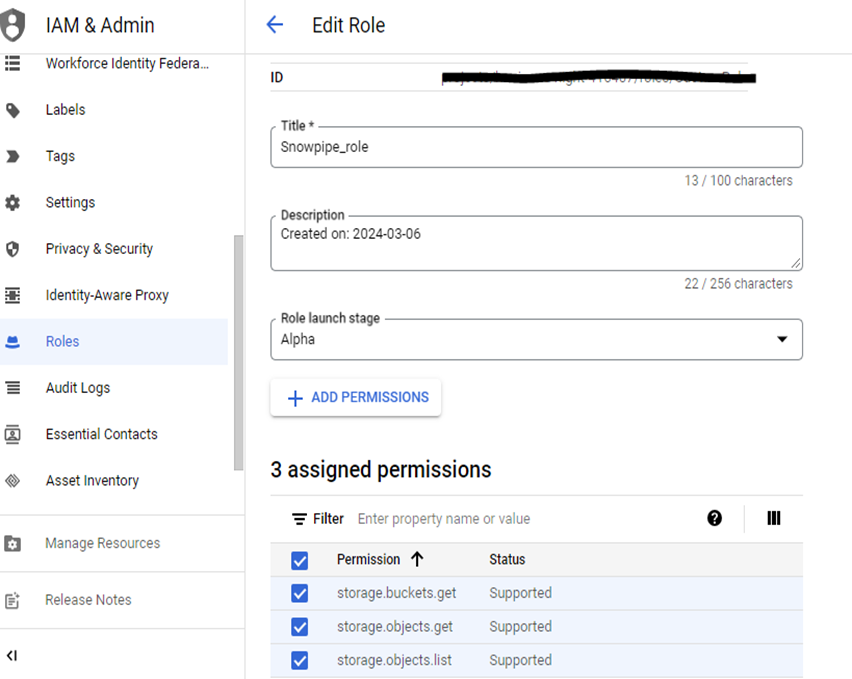

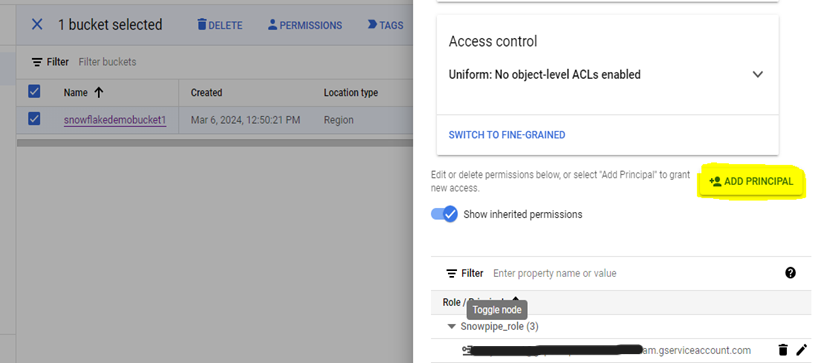

4. In GCP, navigate to IAM & ADMIN and select roles. Create custom role and add permissions for storage.buckets.get, storage.objects.get, storage.objects.list.

5. Next, select your bucket; on the right, you will see principals > click add principal; provide the service account name from the DESCRIBE Storage Integration output from Snowflake; and add the custom role that we created in the roles.

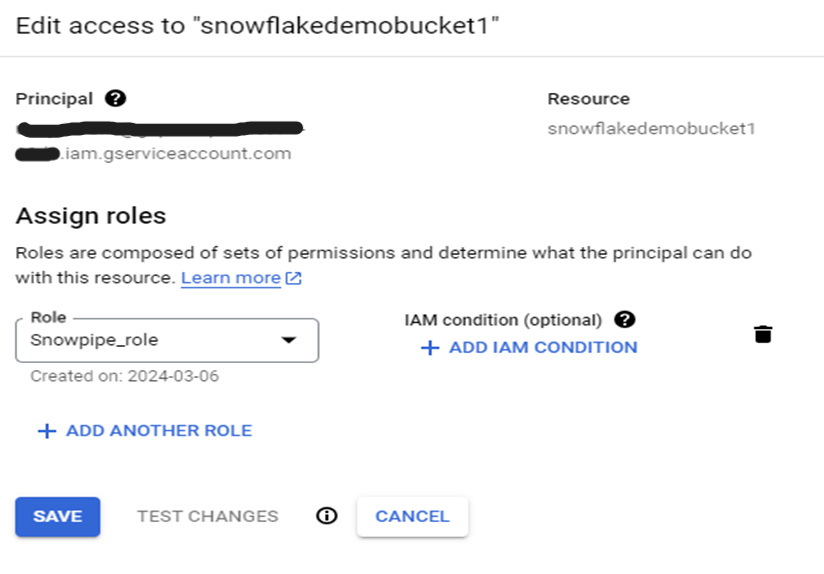

6. Next, to enable the Notification, open a cloud shell in GCP with the ID of your project and execute the following command “$ gsutil notification create -t snowpipe (new notification name) -f json gs://snowflakedemobucket1/ (gcs bucket path)”.

7. After executing the above command, the notification will be created successfully.

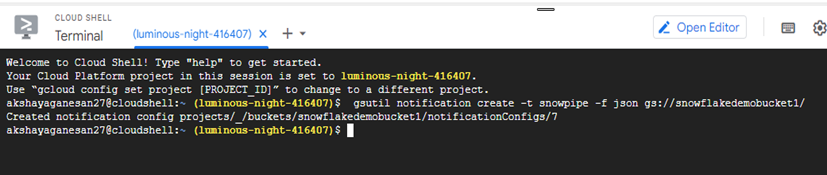

8. Open the pub/sub in the GCP and create a subscription under the created topic.

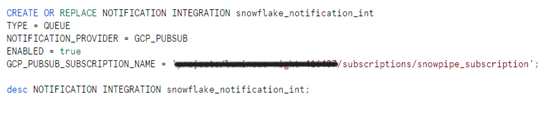

9. In Snowflake, create a notification integration with the created subscription name in GCP.

10. Desc notification integration and take the GCP Pub/Sub service account.

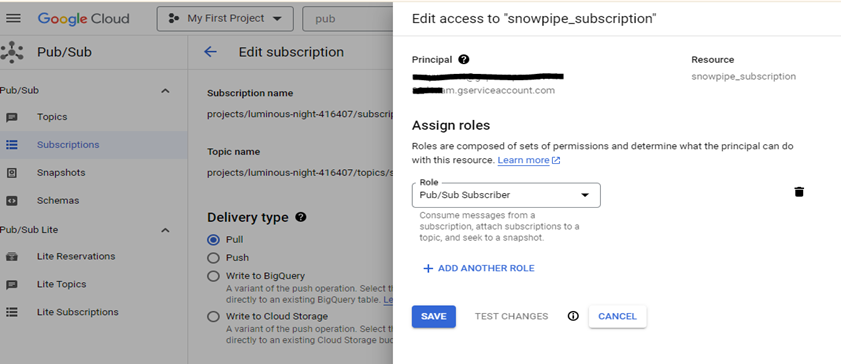

11. In GCP, add the principal to the Pub/Sub service account with the Pub/Sub subscriber role and click on save.

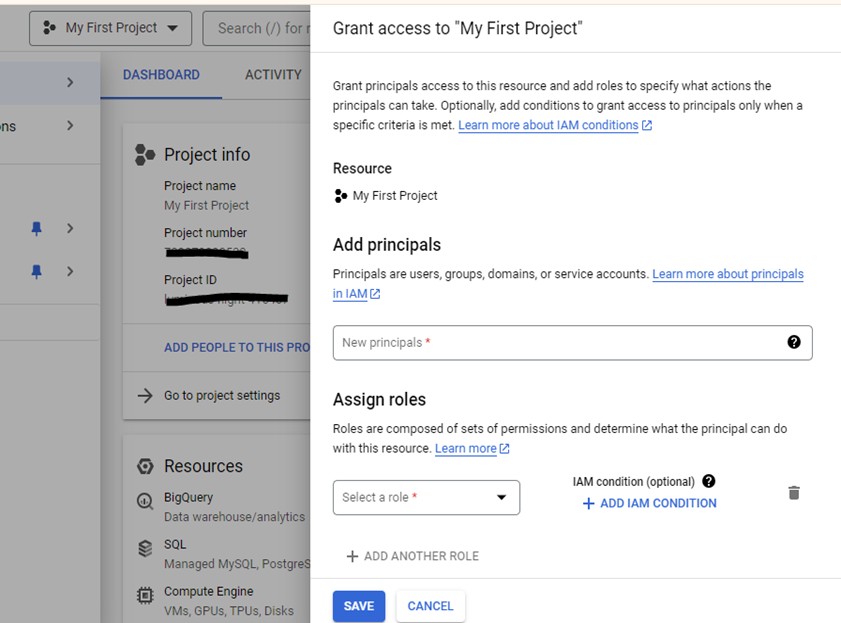

12. Further, on the project level, create a principal for the notification service account and add a role Monitor Viewer to the principal.

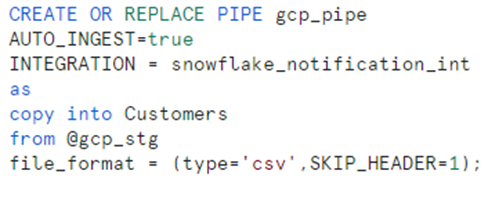

13. Create a snowpipe with the commands in the snowflake.

Conclusion:

As seen above, data can be efficiently loaded from a Google Cloud Storage (GCS) bucket using Snowpipe. This process ensures that data is continuously ingested in near real-time, leveraging Snowflake’s automated data ingestion capabilities. By adhering to each step outlined here, users can seamlessly integrate their data pipeline, thereby facilitating the smooth and reliable transfer of data from the GCS bucket into the Snowflake environment. This method not only enhances data accuracy but also streamlines the overall data management workflow.

Cittabase is a select partner with Snowflake. Please feel free to contact us regarding your Snowflake solution needs. Our snowflake solutions encompass a suite of services for your data integration and migration needs. We are committed to providing personalized assistance and support customized to your requirements.