- September 4, 2023

- Posted by: Sahana Ishwarya

- Category: Snowflake

Introduction:

In the realm of modern data management, Snowflake stands out as a cloud-based data warehousing platform known for its flexibility and scalability. Among its standout features, dynamic tables have emerged as a revolutionary approach to data manipulation. In this guide, we’ll dive deep into the concept of dynamic tables, explore their advantages, and understand how they can transform your data management workflows.

Understanding Dynamic Tables:

Dynamic tables are a groundbreaking feature within Snowflake that offer a paradigm shift in the way we manage and transform data. Unlike the traditional approach of creating separate tables for transformation and updates, dynamic tables enable us to define the target table itself as dynamic. This means that the target table specification includes SQL statements for performing transformations.

Key Benefits:

- Streamlined Transformations:

With dynamic tables, the process of loading data from a stage to a target table becomes more efficient. Transformations are specified directly within the dynamic table’s SQL statement, eliminating the need for a separate transformation step.

- Automatic Refresh Process:

Dynamic tables materialize query results and automatically update the table through regular refreshes. This includes both full and incremental refreshes, enhancing data currency and accuracy.

- Orchestration and Scheduling:

Dynamic tables manage the scheduling and orchestration of complex data pipelines. This automation simplifies the overall data processing workflow.

- Data Integrity:

Since the content of a dynamic table is defined by a query, it cannot be modified through traditional DML operations such as insert, update, or delete. This ensures data consistency and prevents accidental alterations.

Syntax and Implementation:

CREATE OR REPLACE DYNAMIC TABLE <name>

TARGET_LAG = ‘1 HOUR’ | ‘downstream’

WAREHOUSE = COMPUTE_WH

AS

SELECT <command>;

The implementation of dynamic tables involves the following components:

- Target Lag: Specifies the refresh interval. Using ‘downstream’ as a keyword enables manual data refresh.

- Warehouse: Utilized to allocate compute resources for the refresh process.

- SELECT Command: Contains the SQL transformations for the dynamic table.

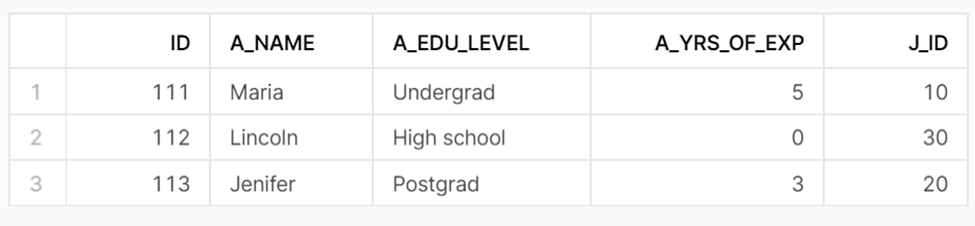

In the Snowflake environment, I have created STG_RAW_APPLICANTS and STG_RAW_JOBS tables to store applicant and job data, respectively.

Staging Tables

SELECT * FROM STG_RAW_APPLICANTS;

SELECT * FROM STG_RAW_JOBS;

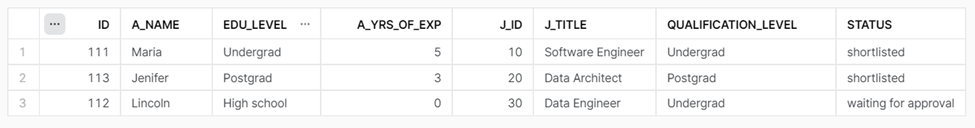

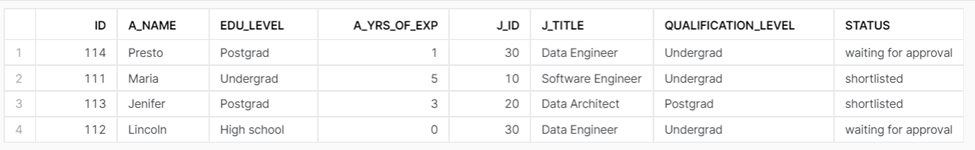

In this example, I have created a dynamic table named DT1_CANDIDATES that leverages the power of SQL queries to join applicant and job data. The status column categorizes candidates based on job requirements, reflecting the dynamic nature of the process.

Dynamic Table

CREATE OR REPLACE DYNAMIC TABLE DT1_CANDIDATES

TARGET_LAG = DOWNSTREAM

WAREHOUSE = COMPUTE_WH

AS

SELECT

ID, A_NAME, A.A_EDU_LEVEL AS EDU_LEVEL, A_YRS_OF_EXP,

J.J_ID, J_TITLE, J.J_EDU_LEVEL AS QUALIFICATION_LEVEL,

— To categorize applicant details based on job requirements

CASE WHEN A.A_EDU_LEVEL = J.J_EDU_LEVEL THEN ‘SHORTLISTED’ ELSE ‘WAITING FOR APPROVAL’ END AS STATUS

FROM STG_RAW_APPLICANTS A INNER JOIN STG_RAW_JOBS J ON (A.J_ID = J.J_ID);

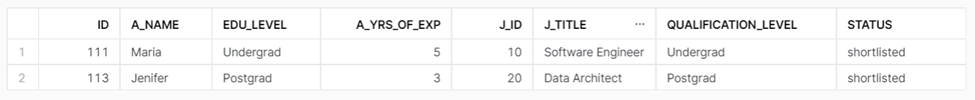

DT2_SELECTED_CANDIDATES table selects and materializes only the ‘shortlisted’ candidates from DT1_CANDIDATES. By cascading dynamic tables, I create a streamlined workflow that efficiently filters and processes data.

Dependent Dynamic Table

CREATE OR REPLACE DYNAMIC TABLE DT2_SELECTED_CANDIDATES

TARGET_LAG = DOWNSTREAM

WAREHOUSE = COMPUTE_WH

AS

SELECT * FROM DT1_CANDIDATES WHERE STATUS = ‘SHORTLISTED’;

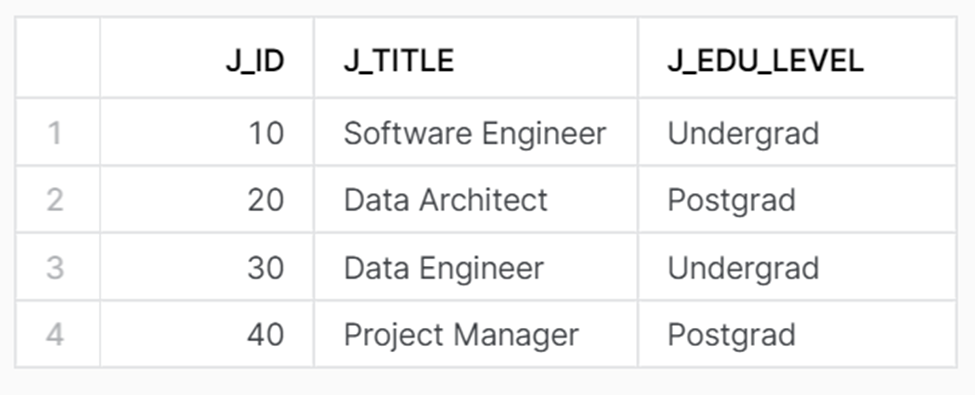

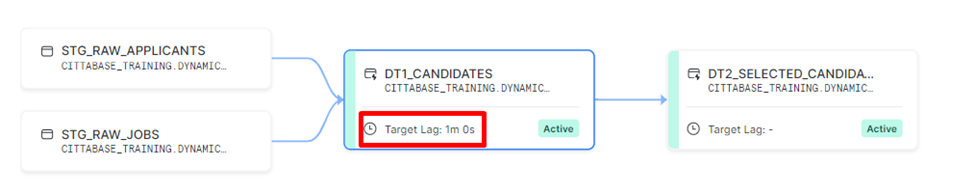

Dynamic Table Graph

The dynamic table graph feature in Snowflake provides insights into the flow of data transformations, dependencies, and refresh schedules, helping you manage and optimize your data workflows effectively.

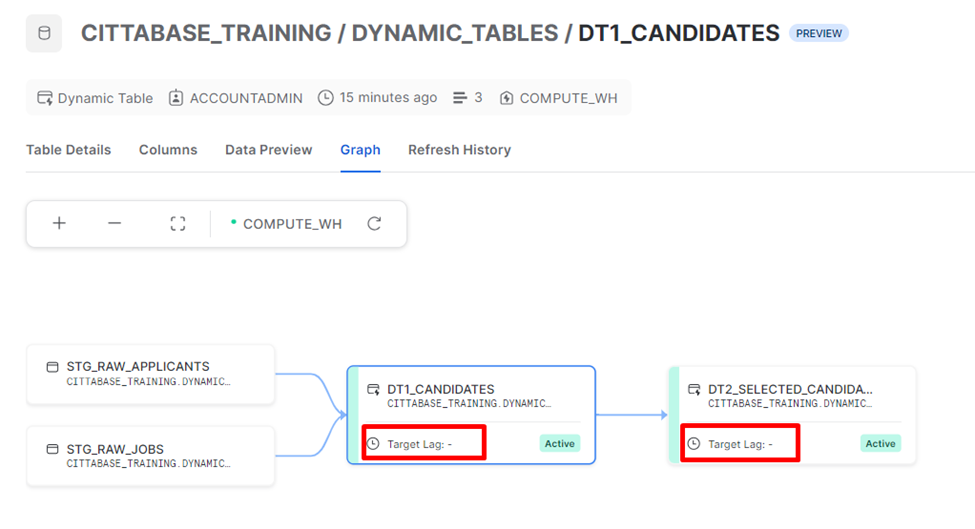

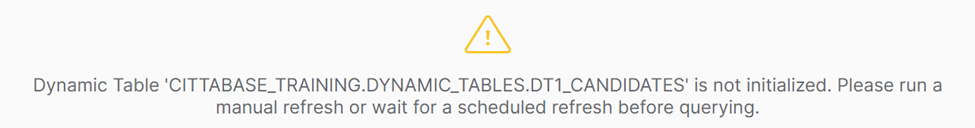

SELECT * FROM DT1_CANDIDATES;

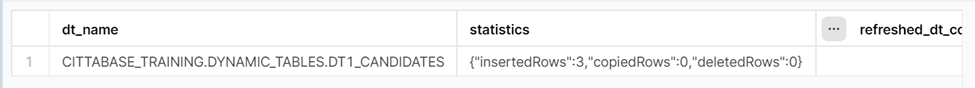

Manual Refresh

ALTER DYNAMIC TABLE DT1_CANDIDATES REFRESH;

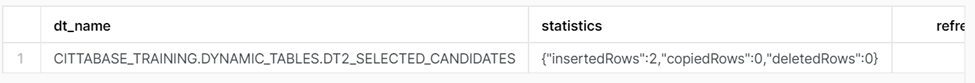

ALTER DYNAMIC TABLE DT2_SELECTED_CANDIDATES REFRESH;

SELECT * FROM DT1_CANDIDATES;

SELECT * FROM DT2_SELECTED_CANDIDATES;

Automatic Refresh

ALTER DYNAMIC TABLE DT1_CANDIDATES SET TARGET_LAG = ‘1 MINUTE’;

INSERT INTO STG_RAW_APPLICANTS (A_NAME, A_EDU_LEVEL, A_YRS_OF_EXP, J_ID) VALUES (‘PRESTO’, ‘POSTGRAD’, 1, 30);

SELECT * FROM DT1_CANDIDATES;

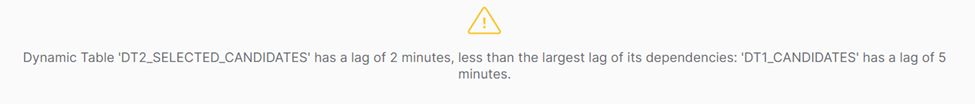

Time Order of Target Lag in Dependent Dynamic Table

ALTER DYNAMIC TABLE DT1_CANDIDATES SET TARGET_LAG = ‘5 MINUTE’;

ALTER DYNAMIC TABLE DT2_SELECTED_CANDIDATES SET TARGET_LAG = ‘2 MINUTE’;

When a dynamic table is established as reliant on another dynamic table, the refresh cycle of the reliant table is impacted by the refresh of the source table. The target lag for a dynamic table must not be set to a shorter duration than the target lag of the dynamic tables on which it is reliant.

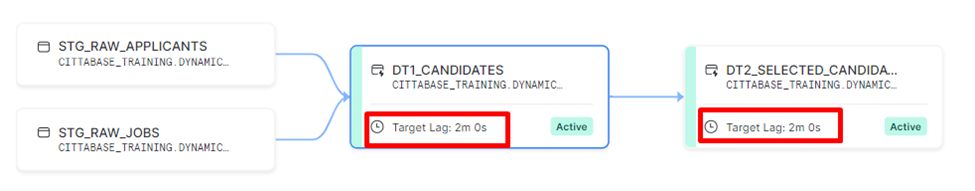

Refresh results in case of dependencies

ALTER DYNAMIC TABLE DT1_CANDIDATES SET TARGET_LAG = ‘DOWNSTREAM’;

ALTER DYNAMIC TABLE DT2_SELECTED_CANDIDATES SET TARGET_LAG = ‘2 MINUTE’;

In the scenario where DT1 is not subject to regular refreshes, and DT2 is dependent on DT1 with a specified lag, the refresh of DT1 occurs whenever an update is needed for DT2. The control over target lag allows you to manage the frequency of updates, ensuring that your data remains current.

Monitoring Dynamic Tables

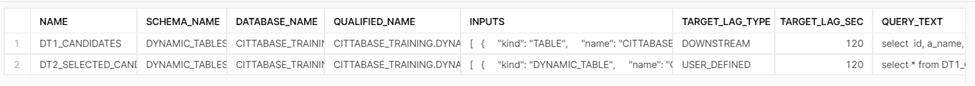

— to display history of dynamic tables, its dependencies, and its properties

SELECT * FROM TABLE(INFORMATION_SCHEMA.DYNAMIC_TABLE_GRAPH_HISTORY());

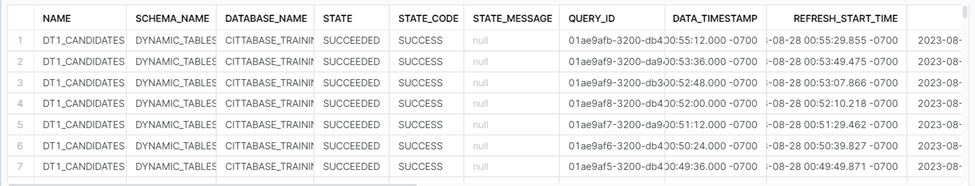

— History of refreshes of all dynamic tables

SELECT * FROM TABLE(INFORMATION_SCHEMA.DYNAMIC_TABLE_REFRESH_HISTORY(NAME => ‘DT1_CANDIDATES’));

— To identify refreshes that had errors

SELECT * FROM TABLE(INFORMATION_SCHEMA.DYNAMIC_TABLE_REFRESH_HISTORY(NAME => ‘DT1_CANDIDATES’, ERROR_ONLY => TRUE));

Limitations and Considerations:

- Table Types: Dynamic tables can be created on permanent and transient tables. Temporary tables within a session are not supported.

- Shared Objects: Dynamic tables cannot be created on shared objects. To work around this, any existing dynamic tables in a database must be dropped before sharing it.

- Materialized Views and External Tables: Dynamic tables do not support materialized views or external tables.

- View Dependencies: Dynamic tables can be created on top of views, but not on views defined from other dynamic tables.

- Unsupported Constructs: Certain query constructs, such as stored procedures, non-deterministic functions not listed in the ‘Non-Deterministic Functions Supported in Dynamic Tables’ category, external functions, and tasks, are not supported in dynamic tables.

Potential Challenges:

There are scenarios in which dynamic tables might encounter challenges:

- Renaming or altering columns may cause refresh failures.

- Applying clustering keys using ALTER commands can disrupt refresh processes.

- Directly applying masking policies during dynamic table creation works, but applying them later might require table recreation for successful refresh.

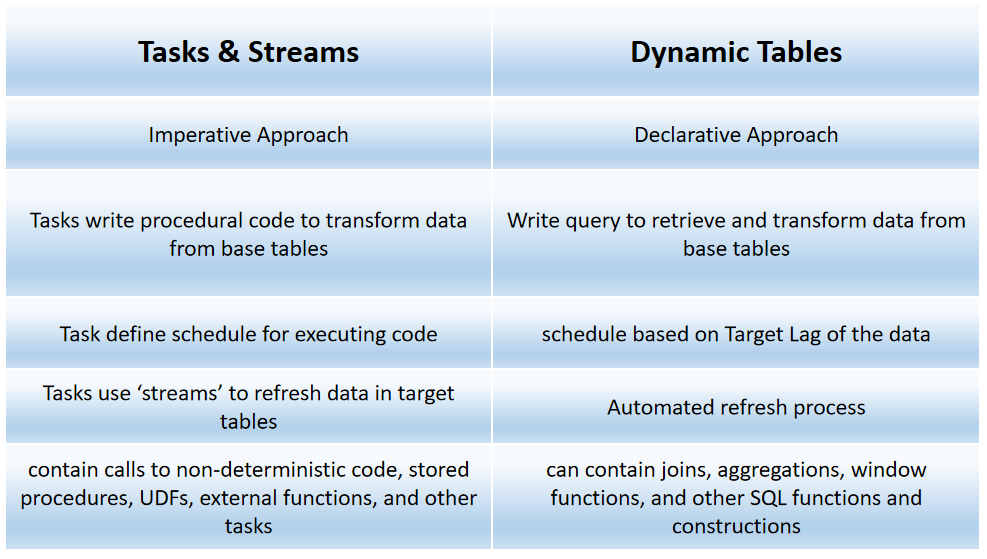

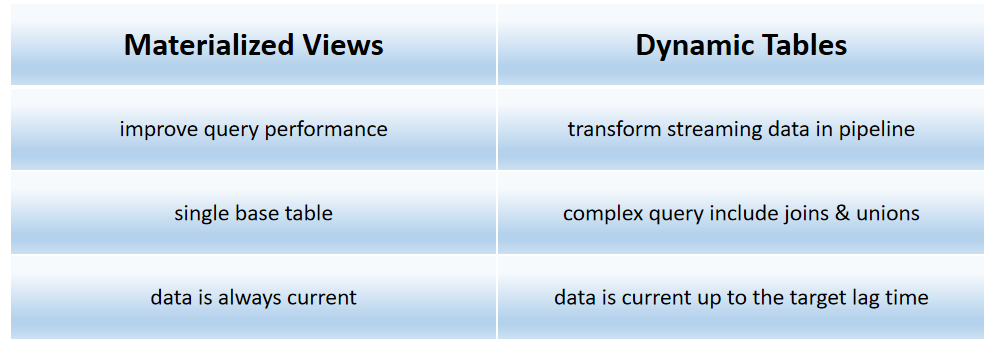

Dynamic Tables VS Streams & Tasks VS Materialized Views:

Conclusion:

Dynamic tables represent a leap forward in data management capabilities within Snowflake. By embedding transformations within the table structure and automating refresh processes, they offer increased agility, accuracy, and efficiency. While they come with certain limitations and considerations, dynamic tables remain a potent tool for modern data professionals seeking to streamline their workflows and make the most of Snowflake’s capabilities.

Please feel free to reach Cittabase for more information. Visit our blogs for more topics on Snowflake

Reference:

https://docs.snowflake.com/en/user-guide/dynamic-tables-about