- August 16, 2022

- Posted by: Vivekk J

- Categories: BDM/DEI, Informatica

Objective:

By the end of this article, you will be able to :

- Understand the purpose of Dynamic mapping

- Design, Configure and Implement Dynamic mapping in real time

- Compare similar services available across Informatica

Overview:

By definition, a Dynamic mapping should handle changes of Source objects, Target objects and transformation logic at run time. The ultimate purpose of this mapping is to avoid manual work while create/synchronize the source and target objects. This will reduce the time, effort and human errors while developing. The content of this blog has been produced based on the Informatica BDM/DEI version 10.5 (latest, at the time of writing this blog).

Business Use Case:

Using Dynamic mapping many use cases can be achieved, here are a few.

- Database to Database/Cloud/Hadoop Migration

- Initial historical data load while building a Data Warehouse/Data Lake from scratch

Design:

For this article we are going to consider use case of loading data from relational databases (Oracle, SQL Server and MySQL) to Hadoop Hive tables using dynamic mapping. Hive tables will be created at run time dynamically. This use case will be apt for Initial/Historical one time load where data needs to be migrated from multiple sources to a single destination (For instance, Operational Data Store/Rawlayer of Data Lake) for hundreds of tables.

Operation:

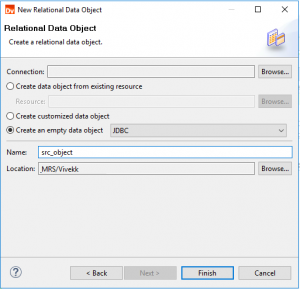

Create a source and target object and mapping in Developer tool.

Create a empty data object for source. Here we have selected JDBC connector which is used to connect different RDBMS instead of native connectors.

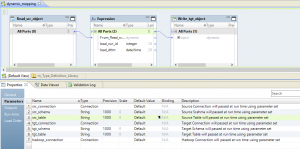

In Source Data Object property, select the check box for “At run time, get data object columns from the data source”. This helps to get the source object at run time.

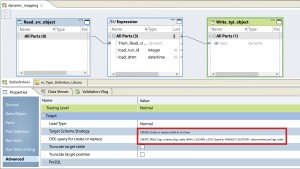

Create target object and select Link Type as “Link dynamic port based on the mapping flow”

To create target table at run time, Select Target Schema Strategy as ‘CREATE’ and we can use DDL at Advanced property as ‘CREATE TABLE <schema_name>.<table_name> ({INFA_COLUMN_LIST}) STORED AS PARQUET LOCATION “/user/warehouse/<table_name>”‘

Here the parameter values in mapping are kept as ‘Default’. These values will be passed at run time when the workflow starts.

This Dynamic mapping will be running in Hadoop Spark environment.

Configuration:

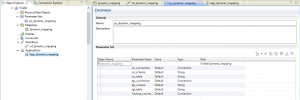

Parameter values can be passed at run time using parameter set or parameter values. In this article, we are going to proceed with Parameter set.

Create a Parameter Set and import the dynamic mapping so that all the parameter keys will be passed. Here the values will be ‘Default’ since the values will be passed at run time.

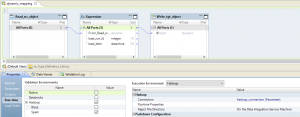

Create a Workflow with Command Task.

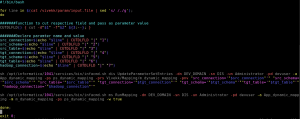

Command Task – Used to set the parameter values at run time and kick start the mapping using inbuilt infacmd utility.

Sample Script File:

Sample Input File:

![]()

Create a Application and select the Mapping and Parameter Set objects and deploy this application to Model Repository Service (MRS).

Comparing Dynamic Mapping Against Alternate Solution:

| Scenarios | Informatica DEI – Dynamic Mapping | Informatica Mass Ingestion | Informatica Data Synchronization |

| Can handle semi and unstructured data? | Yes | Yes | No – Suitable for Relation DB, Flat file, Sales force |

| Can do Insert, Update and Delete? | Partially – Based on Hive storage format | Partially – Only Truncate and Insert possible | Yes |

| Can run in Hadoop environment? | Yes | Yes | No – Only in secure native environment |

| Can control sequential and parallel load? | Yes | No – Parallel only possible | No – Only one table possible at a time |

| Can run in Spark and Blaze engine? | Yes | No – Only Map Reduce | No – Only in secure native environment |

Limitation:

Here are the few limitations that we have encountered.

- Dynamic mapping can’t handle MediumInt data type from MySQL Database.

- No option to concatenate dynamically all the incoming columns at once. For Instance, your use case is to take MD5 by concatenating all the incoming columns, this is not possible in Dynamic mapping.

Conclusion:

There are various ways in which a Dynamic mapping can help your business by reducing the cost, effort and human error, increases reusability and productivity. As a conclusion Dynamic mapping may be used where Data Synchronization and Mass Ingestion are having challenges in meeting the requirements.

Please feel free to comment and share your experience on Dynamic mapping if you would know any other limitations or features that are not mentioned here. We will be glad to respond/update the blog based on such feedback.